Abstract.

Estimating video depth in open-world scenarios is challenging due to the diversity of videos in appearance, content motion, camera movement, and length. We present DepthCrafter, an innovative method for generating temporally consistent long depth sequences with intricate details for open-world videos, without requiring any supplementary information such as camera poses or optical flow. We achieve generalization to open-world videos by training the video-to-depth model from a pre-trained image-to-video diffusion model with a meticulously designed three-stage training strategy. This training approach enables the model to generate depth sequences of variable length, up to 110 frames, in a single pass, and to harvest both precise depth details and rich content diversity from realistic and synthetic datasets. We also propose an inference strategy that can process extremely long videos through segment-wise estimation and seamless stitching. Comprehensive evaluations on multiple datasets show that DepthCrafter achieves state-of-the-art performance in open-world video depth estimation under zero-shot settings. Furthermore, DepthCrafter facilitates various downstream applications, including depth-based visual effects and conditional video generation.

Overview.

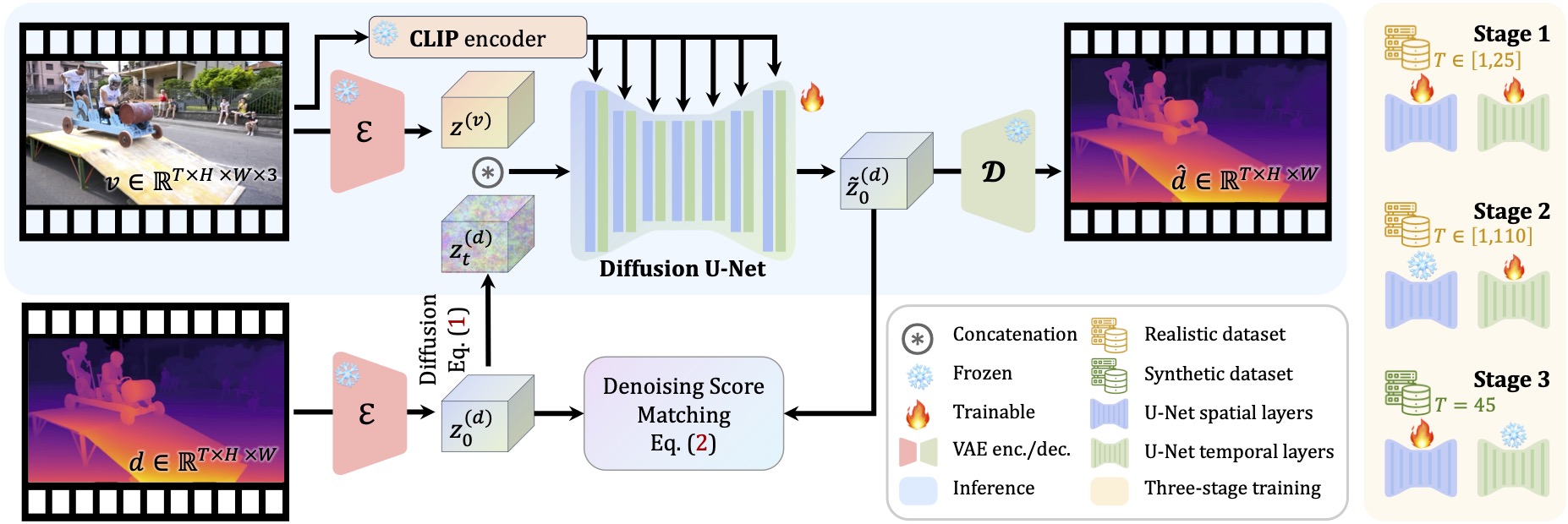

DepthCrafter is a conditional diffusion model that models the distribution over depth sequences conditioned on the input video. We train the model in three stages, progressively learning the spatial or temporal layers of the diffusion model on our compiled realistic or synthetic datasets with variable sequence lengths T. During inference, given an open-world video, the model generates temporally consistent long depth sequences with fine-grained details for the entire video, starting from initialized Gaussian noise and without requiring any supplementary information such as camera poses or optical flow.
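The conditional formulation can be written compactly as below. This is a hedged sketch, not the paper's stated objective: it assumes a generic EDM-style denoising objective of the kind used by common image-to-video diffusion backbones, and the symbols (depth sequence d, video v, noise level sigma, denoiser D_theta, weighting lambda(sigma)) are illustrative. A latent diffusion model would apply it to encoded latents rather than raw depth, and the actual parameterization and loss weighting may differ.

$$
\mathbf{d}^{1:T} \sim p_\theta\!\left(\mathbf{d}^{1:T} \mid \mathbf{v}^{1:T}\right),
\qquad
\mathcal{L}(\theta) = \mathbb{E}_{(\mathbf{d},\mathbf{v}),\,\sigma,\,\boldsymbol{\epsilon}\sim\mathcal{N}(\mathbf{0},\mathbf{I})}\Big[\lambda(\sigma)\,\big\lVert D_\theta\big(\mathbf{d}^{1:T}+\sigma\boldsymbol{\epsilon};\,\sigma,\,\mathbf{v}^{1:T}\big)-\mathbf{d}^{1:T}\big\rVert_2^2\Big]
$$

Under this assumption, sampling starts from initialized Gaussian noise $\mathbf{d}_{\sigma_{\max}} \sim \mathcal{N}(\mathbf{0}, \sigma_{\max}^2\mathbf{I})$ and repeatedly denoises it while conditioning every step on the same video $\mathbf{v}^{1:T}$.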

Inference for extremely long videos.

We divide the video into overlapping segments and estimate the depth sequence of each segment, using a noise initialization strategy to anchor the scale and shift of the depth distributions across segments.
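The segmentation and noise initialization can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not DepthCrafter's implementation: the helper names `split_segments` and `init_segment_latents`, the example segment length and overlap, and the EDM-style forward process z = z0 + sigma_max * eps are all hypothetical. The idea shown is that the overlapped frames of a new segment start from a noised copy of the previous segment's estimated latents instead of pure noise, which pulls the new estimate toward the same scale and shift.

```python
import numpy as np


def split_segments(num_frames, seg_len, overlap):
    """Return (start, end) frame ranges; consecutive ranges share `overlap` frames.

    Hypothetical helper: the last segment may be shorter than `seg_len`,
    which assumes the model accepts variable-length inputs.
    """
    if num_frames <= 0 or seg_len <= 0 or not 0 <= overlap < seg_len:
        raise ValueError("need num_frames > 0, seg_len > 0, 0 <= overlap < seg_len")
    stride = seg_len - overlap
    segments, start = [], 0
    while True:
        end = min(start + seg_len, num_frames)
        segments.append((start, end))
        if end >= num_frames:
            return segments
        start += stride


def init_segment_latents(shape, prev_overlap_latents, sigma_max, rng):
    """Initial noisy latents for one segment (hypothetical helper).

    Non-overlapped frames start from pure Gaussian noise with std `sigma_max`.
    Overlapped frames start from the previous segment's estimated (clean)
    latents plus the same amount of noise, assuming an EDM-style forward
    process z = z0 + sigma * eps.
    """
    latents = sigma_max * rng.standard_normal(shape)
    if prev_overlap_latents is not None:
        n = prev_overlap_latents.shape[0]
        latents[:n] += prev_overlap_latents  # anchor the overlapped frames
    return latents


if __name__ == "__main__":
    print(split_segments(250, seg_len=110, overlap=25))
    # -> [(0, 110), (85, 195), (170, 250)]
```

Because the stride is seg_len minus overlap, every segment after the first is guaranteed to contain more than `overlap` frames, so the anchored frames never fill a whole segment.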

These estimated segments are then seamlessly stitched together with a latent interpolation strategy to form the entire depth sequence.
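One way to realize the latent interpolation is a linear cross-fade over the overlapped frames, sketched below in Python with NumPy. This is an assumption for illustration: the helper name `stitch_latents`, the linear weight ramp, and the (frames, ...) array layout are hypothetical, and the exact interpolation weights DepthCrafter uses are not specified here. The stitched latent sequence would then be decoded into the depth sequence, a step omitted here.

```python
import numpy as np


def stitch_latents(segment_latents, overlap):
    """Concatenate per-segment latents of shape (frames, ...) into one sequence.

    Consecutive segments are assumed to share `overlap` frames. In each shared
    region the weight on the earlier segment falls linearly from 1 to 0 while
    the weight on the later segment rises from 0 to 1.
    """
    if overlap < 0:
        raise ValueError("overlap must be non-negative")
    out = segment_latents[0]
    for cur in segment_latents[1:]:
        if overlap == 0:
            out = np.concatenate([out, cur], axis=0)
            continue
        # Shape (overlap, 1, 1, ...) so the weights broadcast over non-time axes.
        w = np.linspace(1.0, 0.0, overlap).reshape((overlap,) + (1,) * (cur.ndim - 1))
        blended = w * out[-overlap:] + (1.0 - w) * cur[:overlap]
        out = np.concatenate([out[:-overlap], blended, cur[overlap:]], axis=0)
    return out


if __name__ == "__main__":
    segs = [np.zeros((110, 4)), np.ones((110, 4)), np.ones((80, 4))]
    print(stitch_latents(segs, overlap=25).shape)  # -> (250, 4)
```

Since each pair of weights sums to one, frames where both segments agree are left (numerically) unchanged, and any disagreement is spread across the overlap instead of appearing as a jump at a single frame.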